Tim Palmer says that we must pool our resources to produce high-resolution climate models that societies can use, before it is too late

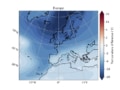

Melting of polar ice is decreasing our planet’s angular velocity

Read article: Atlantic current circulation could shut down, say climate scientists

The collapse of the main current that warms Europe would have far-reaching consequences for ecosystems as well as human civilization

Read article: Weathering and ocean burial of rocks could have triggered Earth’s ice ages

Layered rocks called ophiolites trap carbon efficiently and may still be cooling the planet today

Read article: Mystery of how dolomite forms could be solved at long last

Computer simulations and electron microscopy reveal mineral’s secret

Read article: Stratospheric effect boosts global warming as carbon dioxide levels rise

New research explains variations in climate-model predictions

Read article: Iron atoms in Earth’s inner core are on the move

Result could shed light on the seismic and geodynamic processes at play deep inside our planet

Read article: Flash heating technique extracts valuable metals from battery waste quickly and cheaply

New way of recovering material from lithium-ion devices also requires much less acid than other methods

Johan Hansson argues that it will never be possible to meet our climate targets if countries continue their obsession with growing the economy

James McKenzie looks at the opportunities for physicists in the new “green economy”

Read article: Duke of Edinburgh visits Institute of Physics to hear how physicists are supporting the green economy

Matin Durrani reports on the visit of the Duke of Edinburgh to the Institute of Physics

Read article: Graphene-based materials show great promise for hydrogen transport and storage

Materials expert Krzysztof Koziol also talks about hydrogen-powered aircraft

Read article: Electrochemical conversion of high-pressure carbon dioxide

The Electrochemical Society, in partnership with Scribner and Hiden Analytical, explores an environmentally friendly approach to upcycling CO2 emissions

Read article: Award-winning technology allows a paralysed person to walk, new journal focuses on sustainability

This podcast features a neuroscientist and a catalysis expert

Read article: Revolutionizing renewable energy: the promise of water splitting

IOP Publishing explores innovative solutions in renewable energy

Read article: Sustainable success demands joined-up thinking

Finding creative solutions to sustainability challenges requires input from diverse communities, argues the editor-in-chief of Sustainability Science and Technology

Read article: Why Alice & Bob are making cat qubits, IOP calls for action on net-zero target

This podcast features a quantum entrepreneur and an energy expert

Read article: ‘Now is the time for action’ to reach net-zero climate targets, demands IOP report

Most physicists believe that on current trends the UK will fail to hit its climate goals

Read article: Going carbon negative to address climate change

The Electrochemical Society, in partnership with Element6, BioLogic, Gamry Instruments and TA Instruments – Waters, explores how to deliver technologies that can reduce carbon impact

Read article: Dangerous soil liquefaction can occur away from earthquake epicentres in drained conditions

Discovery could also shed light on the history of earthquakes in a region

Read article: Microplastics with elongated shapes travel further in the environment

Atmospheric transport of microplastics is highly sensitive to particle shape and size

Read article: Bursting bubbles accelerate melting of tidewater glaciers

High-pressure air delivers kinetic energy to mixing fluids

Read article: Laser gyroscope measures tiny fluctuations in Earth’s rotation

Lab-sized instrument could shed light on shifting ocean currents

Read article: Organic molecule from trees excels at seeding clouds, CERN study reveals

Sesquiterpene creates cloud-forming particles

Read article: Droplet-based ‘wind farms’ harvest low-speed wind energy

Anchored droplets of ionic liquids collect enough charge to power a pocket calculator

Read article: Astrophysicist uses X-rays to explore the universe, heat pumps could prevent potholes

This podcast features conversations with an astrophysicist and a civil engineer

Read article: Fallout from nuclear weapons testing explains the ‘wild boar paradox’ of radioactive meat

Persistently high levels of caesium-137 in European wild boar are a legacy of early Cold War nuclear testing, not just Chornobyl, say researchers